AI Data Pipeline (Beta)

Securely connect your digital workplace data to Large Language Models (LLMs) via Retrieval-Augmented Generation (RAG).

Your enterprise data + LLMs

Using generative AI with your enterprise data unlocks powerful possibilities for your organization. Successful generative AI adoption starts with a strong data foundation. With Onna, you can build a centralized repository for unstructured data from your digital workplace tools, and create a secure pipeline that connects your data to LLMs.

Contact us to learn more

How to create an AI Data Pipeline with Onna

1. Centralize

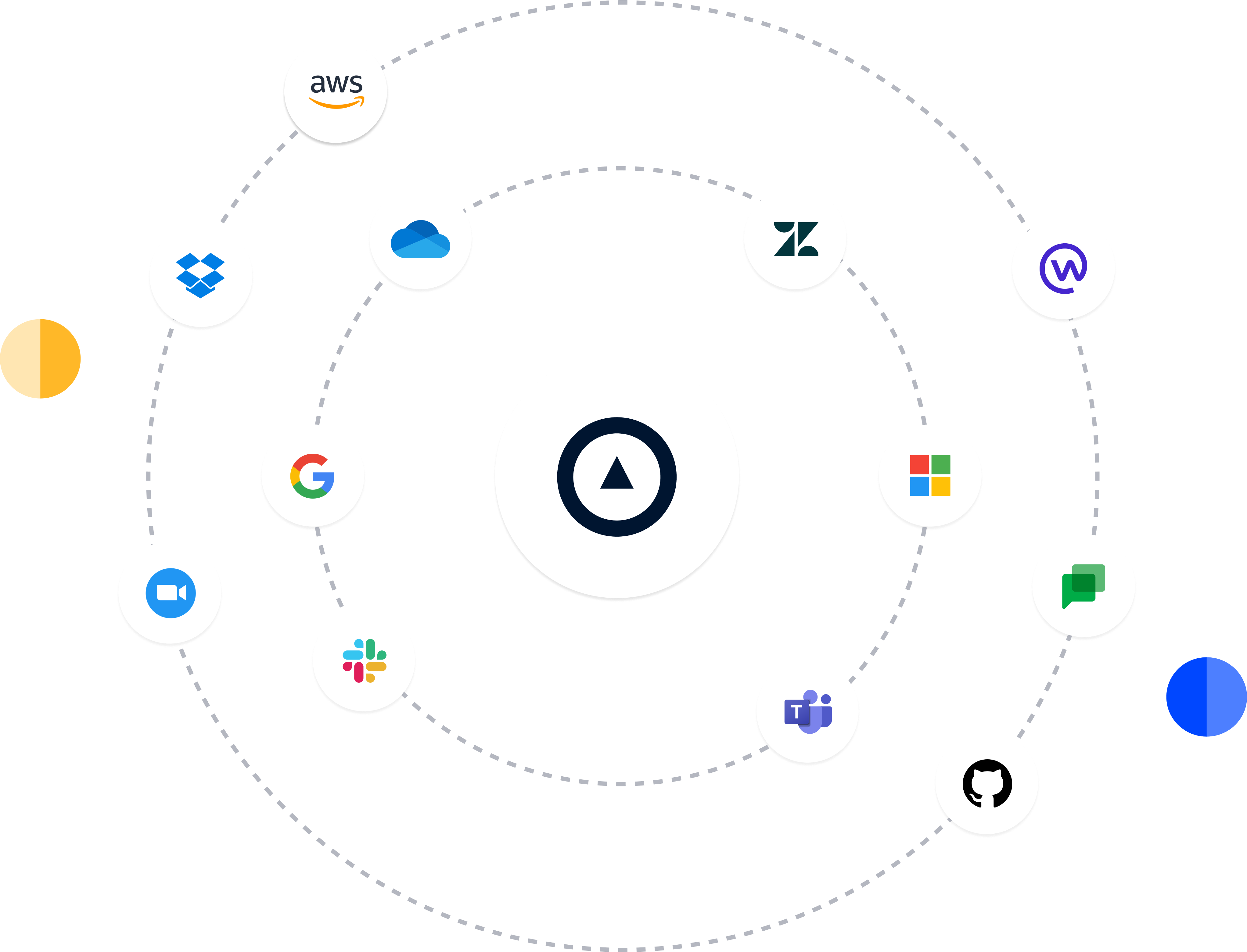

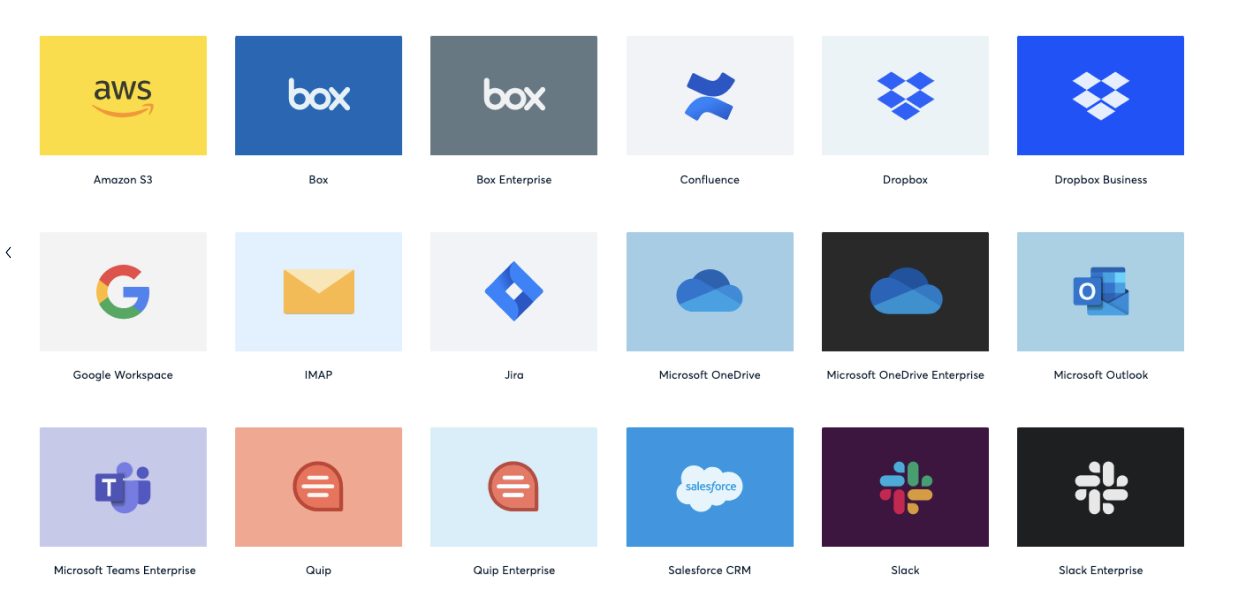

Centralize unstructured data from your digital workplace

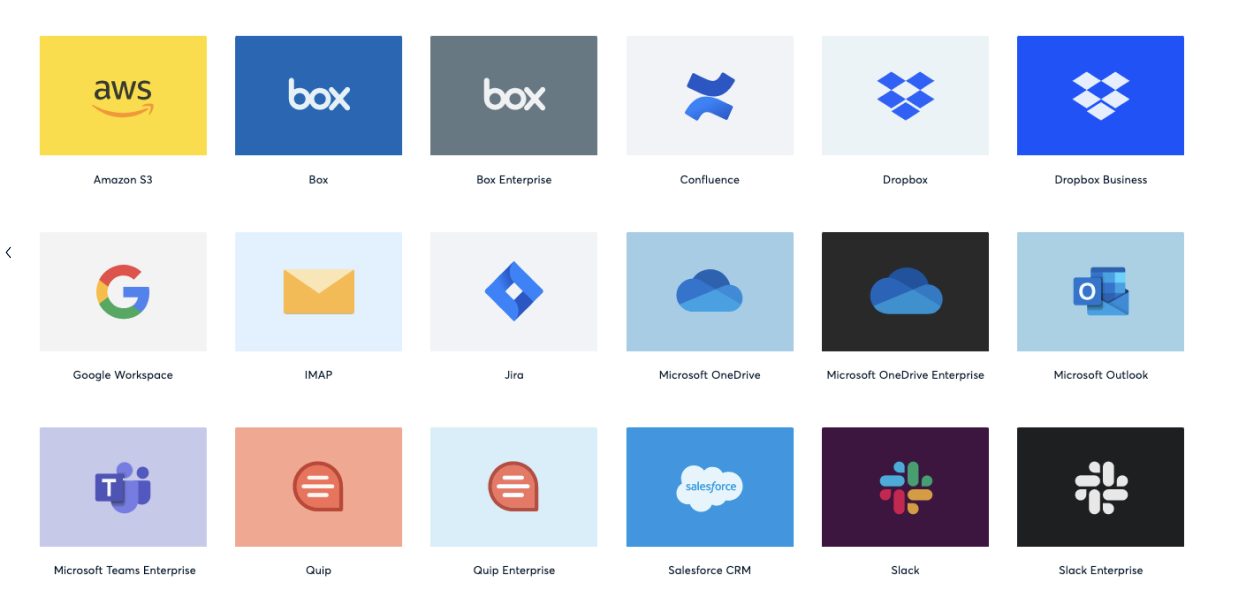

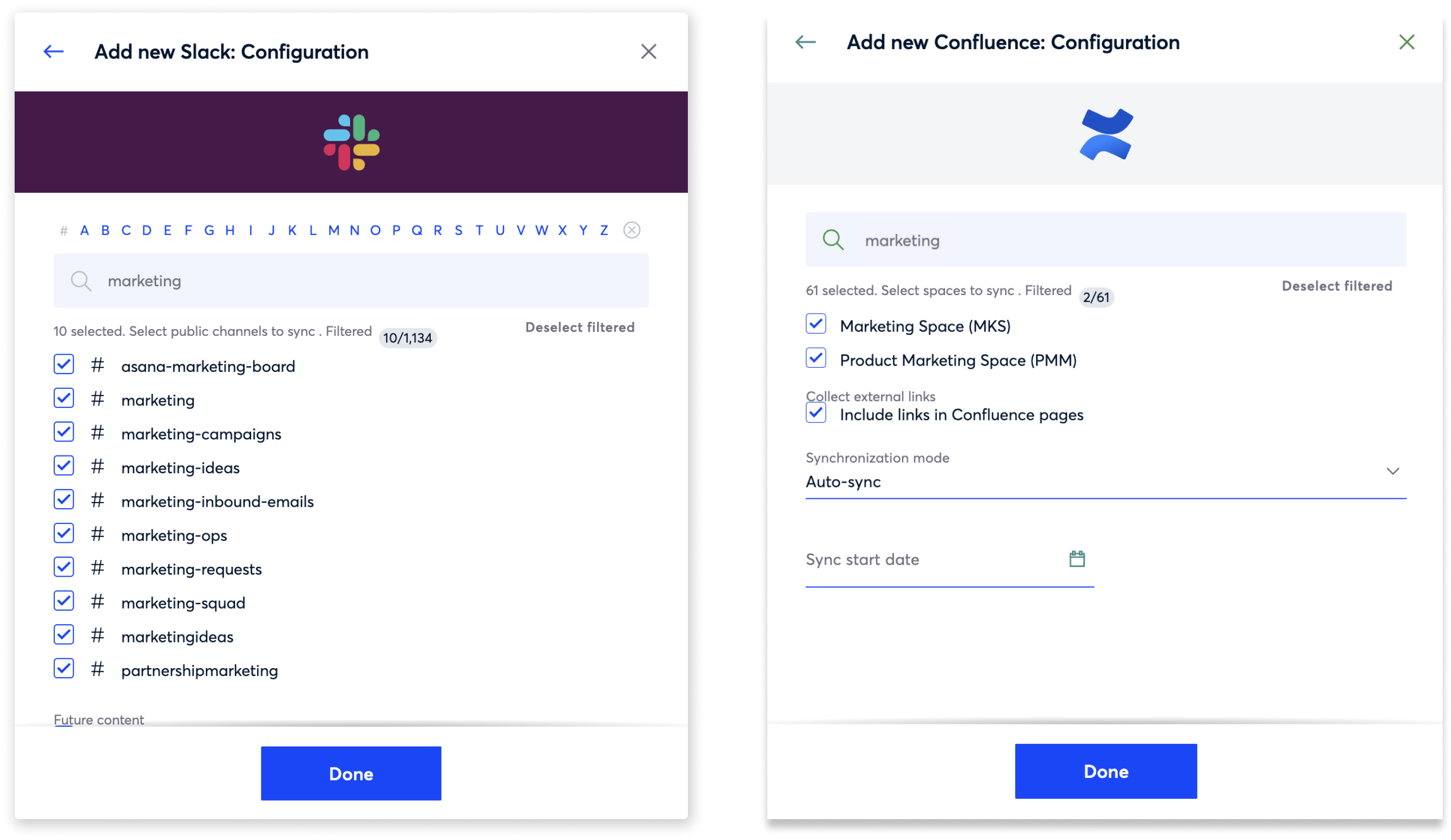

Use no-code connectors for common collaboration, communication, and chat tools to centralize the data you want to use with LLMs.

Don't see a connector for one of your data sources? Learn more about how to use our Platform API to ingest data from custom sources.

2. Curate

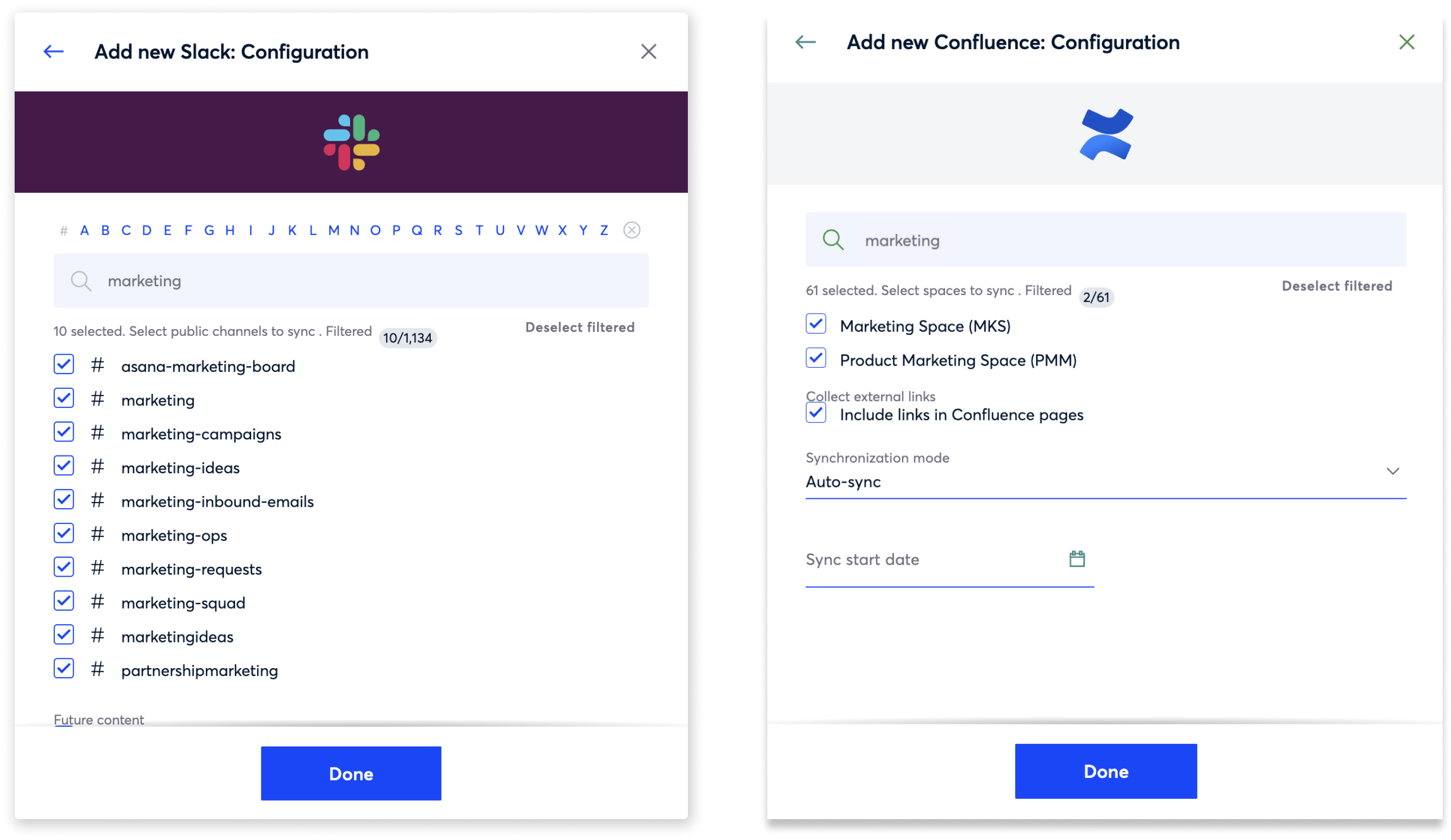

Curate an LLM-ready dataset

Prepare data for use with LLMs by creating highly curated datasets. Use source-specific parameters to ingest exactly what you need. Visibility into the data allows you to identify what you want to use and filter out what you don't.

3. Transform

Automatically transform data into a usable format and calculate vector embeddings

Onna's processing pipeline transforms and standardizes unstructured data into a usable asset. In addition to processing and indexing multiple file types, Onna calculates and stores vector embeddings to power a Retrieval-Augmented Generation (RAG) workflow.
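To make the idea of vector embeddings concrete, here is a minimal sketch of chunking text and turning each chunk into a fixed-length vector. It is an illustration only, not Onna's processing pipeline: `chunk_text`, `toy_embed`, `build_index`, and the 64-dimension hashed word counts are all assumptions chosen so the example runs with the Python standard library. A production workflow would use a trained embedding model instead.

```python
import hashlib
import math
import re

DIM = 64  # toy embedding size (assumption); real embedding models use far more dimensions


def chunk_text(text: str, max_words: int = 50) -> list[str]:
    """Split text into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def toy_embed(text: str) -> list[float]:
    """Hash each token into one of DIM buckets, then L2-normalize the counts."""
    vec = [0.0] * DIM
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        bucket = int(hashlib.md5(token.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def build_index(documents: dict[str, str]) -> list[dict]:
    """Return one record per chunk: source id, chunk number, text, and vector."""
    index = []
    for doc_id, text in documents.items():
        for n, chunk in enumerate(chunk_text(text)):
            index.append(
                {"doc_id": doc_id, "chunk": n, "text": chunk, "vector": toy_embed(chunk)}
            )
    return index


# Hypothetical sample data standing in for centralized workplace content.
docs = {
    "msg-1": "The renewal contract was signed in March.",
    "msg-2": "Lunch is at noon.",
}
index = build_index(docs)
print(len(index), len(index[0]["vector"]))
```

Storing the vector alongside the source id and chunk text is what lets a later retrieval step trace each match back to the original content.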

4. Manage

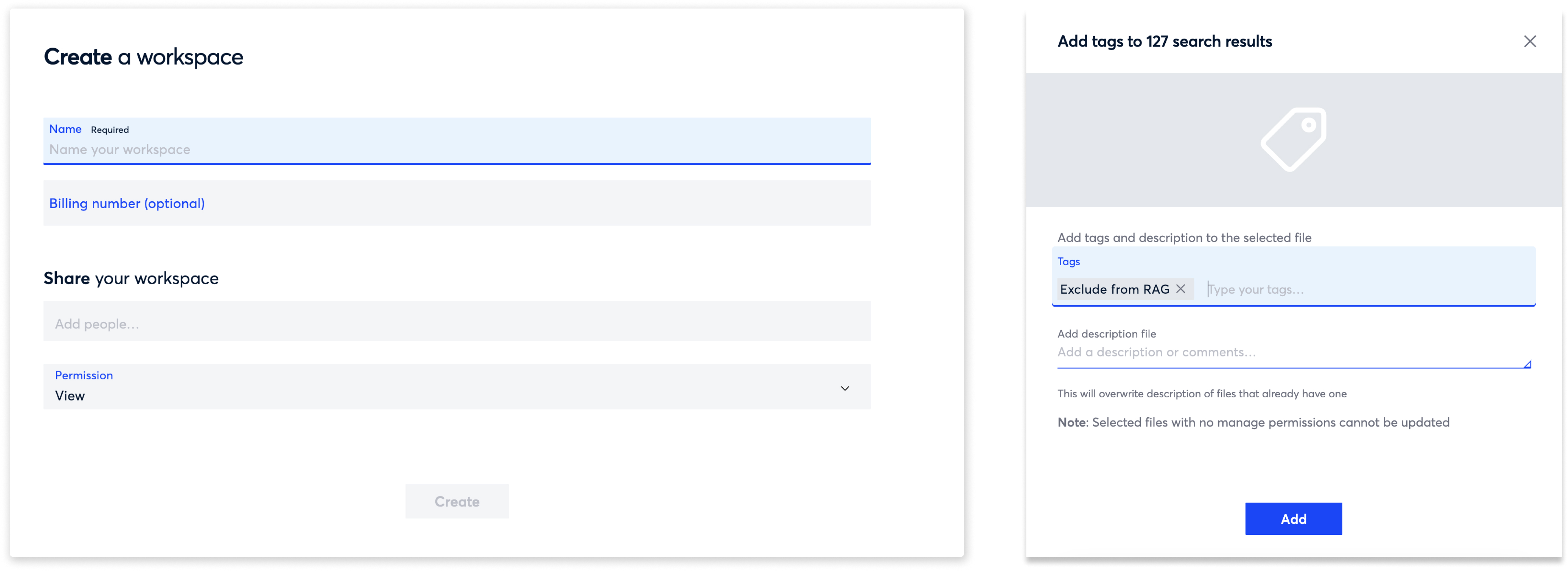

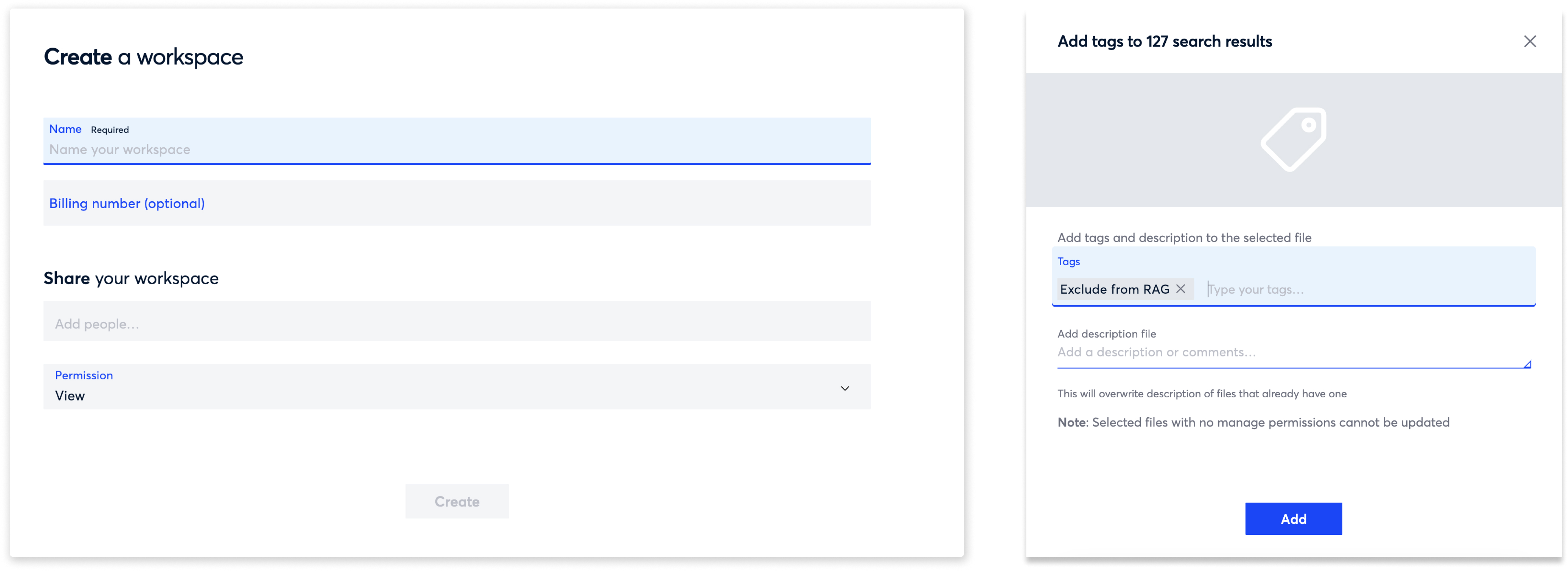

Strategically organize and manage data in Onna

Organize data into Workspaces so you can further audit and examine data used with LLMs. Search, tag, and classify your data, and securely invite team members to each Workspace to collaborate on your data management initiatives.

5. Connect

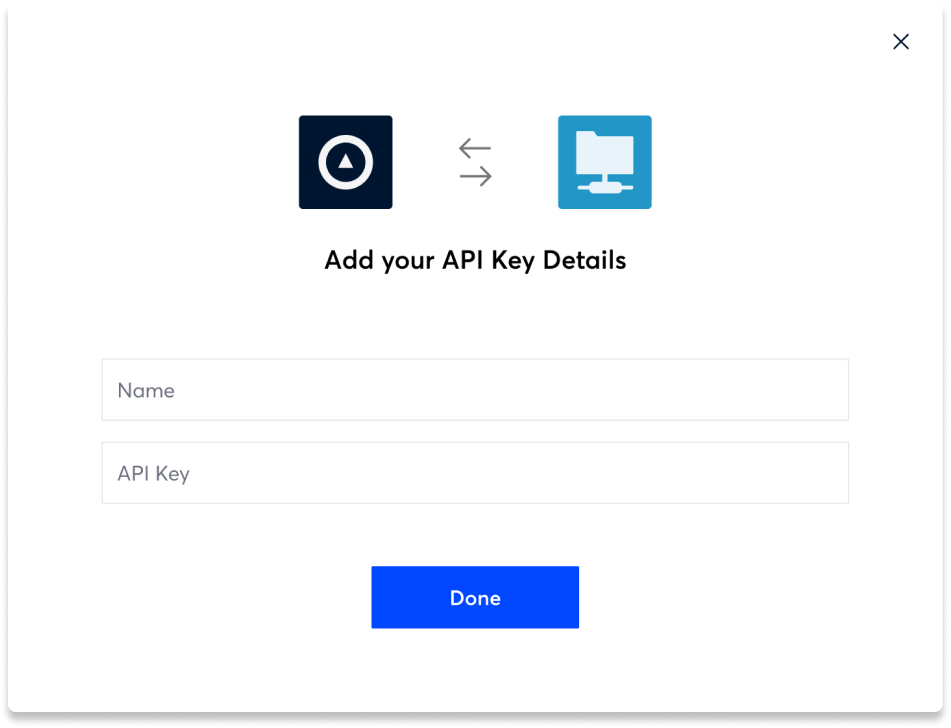

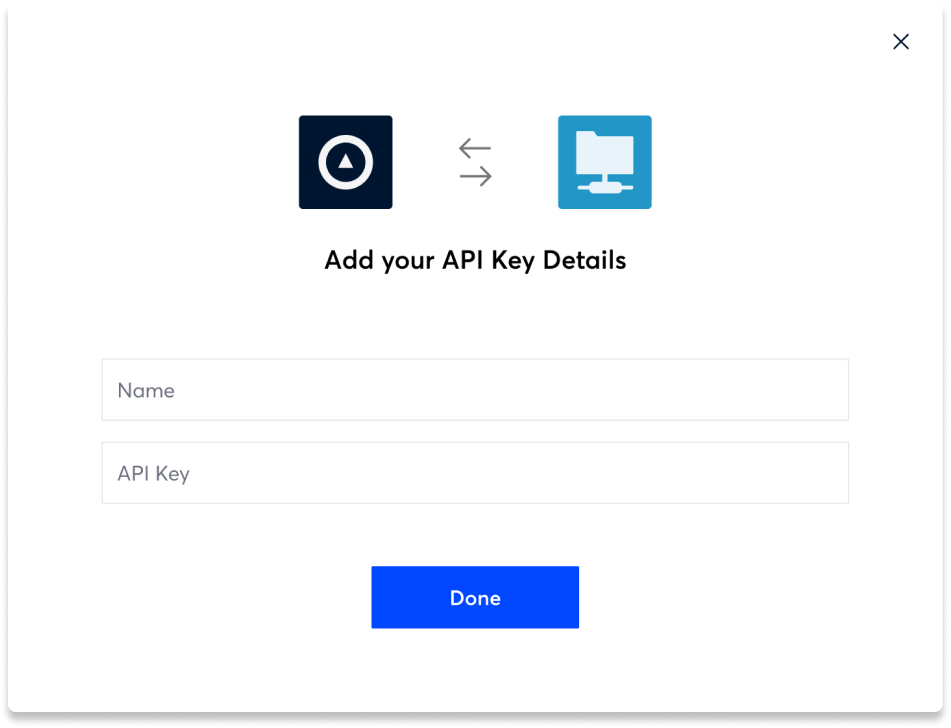

Select & connect to your preferred LLM

Onna's platform is LLM agnostic, so you can leverage your preferred LLM. Use API keys to connect your curated dataset to the LLM you want to use.
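As a rough illustration of connecting with an API key, the sketch below reads the key from an environment variable and builds an authenticated request without sending it. Everything provider-specific here is an assumption: the endpoint URL, the `LLM_API_KEY` variable name, the `Bearer` authorization scheme, and the JSON body shape are placeholders, not Onna's or any particular LLM provider's API. Check your provider's documentation for the real values.

```python
import json
import os
import urllib.request

# Hypothetical values: replace with your LLM provider's real endpoint and variable name.
LLM_ENDPOINT = "https://llm.example.com/v1/generate"
API_KEY_ENV_VAR = "LLM_API_KEY"


def build_llm_request(prompt: str) -> urllib.request.Request:
    """Build (but do not send) an authenticated POST request for a prompt."""
    api_key = os.environ.get(API_KEY_ENV_VAR)
    if not api_key:
        raise RuntimeError(f"Set the {API_KEY_ENV_VAR} environment variable first")
    # Assumed request body shape; real providers define their own schema.
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        LLM_ENDPOINT,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Reading the key from the environment keeps it out of source code and version control, which matters when the request carries curated enterprise data.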

6. Activate

Empower users across your business with generative AI, built on your data

With your enterprise data centralized, curated, and LLM-ready, you can create business-specific generative AI applications. Whether you need a chatbot for the marketing team, a contract analysis tool for the legal team, or something else entirely, you can use Onna to put LLMs to work on your data.

Reach out to Onna's team to learn more about how we can support your generative AI initiatives.

Contact us to learn more

Retrieval-Augmented Generation (RAG) with Onna

RAG is a technique for improving LLM responses and reducing hallucinations. At query time, it retrieves relevant information from domain-specific datasets and supplies it to the LLM as context for its response. No model retraining is required, which makes RAG a cost-effective method for developing specialized AI applications.
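The retrieve-then-generate flow can be sketched in a few lines. This is a simplified illustration under stated assumptions, not Onna's implementation: word-count vectors stand in for real embeddings, and `term_vector`, `cosine`, `retrieve`, `build_prompt`, and the sample passages are hypothetical. The final step, sending the prompt to your preferred LLM, is left out.

```python
import math
import re
from collections import Counter


def term_vector(text: str) -> Counter:
    """Toy stand-in for an embedding: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question: str, passages: list[str], k: int = 2) -> list[str]:
    """Return the k passages most similar to the question."""
    q = term_vector(question)
    ranked = sorted(passages, key=lambda p: cosine(q, term_vector(p)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, context: list[str]) -> str:
    """Place the retrieved passages in the prompt so the LLM can reference them."""
    joined = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{joined}\n\n"
        f"Question: {question}"
    )


# Hypothetical curated passages standing in for your enterprise dataset.
passages = [
    "The vendor contract renews on 1 March each year.",
    "The office kitchen is cleaned on Fridays.",
    "Contract renewals require legal team approval.",
]
question = "When does the vendor contract renew?"
top = retrieve(question, passages, k=2)
prompt = build_prompt(question, top)
print(prompt)
```

Because the domain knowledge arrives in the prompt rather than in the model's weights, updating what the application knows means updating the dataset, not retraining the model.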

"Because no retraining is involved and everything is done via in-context learning, RAG-based inference is fast (sub 100ms latency), and well-suited to be used inside real time applications."

RAG vs Fine-tuning LLMs-What to use, when, and why. | John Hwang, Substack

Learn more about RAG, GenAI use cases, and Onna's AI principles

Check out these resources to learn more about RAG and Onna's approach to AI.

Blog

What is Retrieval-Augmented Generation (and why should every legal professional know about it)?

Learn more

Join the AI Data Pipeline Beta

Ready to get started? Reach out with any questions or to learn more about joining the beta program.

Other solutions

eDiscovery

Learn more

Information Governance

Learn more
