Onna's AI story

In a recent blog post, we outlined six guiding principles for artificial intelligence (AI) at Onna. Our aim was not only to assist others in navigating the evolving AI landscape but also to promote transparency about our AI approach. This approach encompasses our practices, technology, vendors, and data usage, and we extend it to our team, customers, and partners.

Today, I’ll take you through Onna's AI journey — starting from where we began with AI, where we currently stand, where we are headed, and how we apply our principles at every step. Because ultimately, we believe that creating trust in AI requires more than just defining principles; we must actively put those principles into practice.

Onna’s data management fabric

Before we delve into the evolution of our AI, let me provide a high-level overview of how Onna solves the fragmented data problem. Onna was built to help organizations gain control over their data. Typically, this involves unstructured data that proliferates quickly over time and across multiple digital platforms. While some companies choose to tackle this challenge internally, they soon discover the steep costs and complexities associated with such an endeavor.

We’ve developed a highly automated, scalable, resilient, and extensible system to address this challenge. At its core, our system is based on the following key concepts:

- Ingestion. This consists of a set of connectors that interact with third-party APIs to regularly download data on behalf of users or administrators.

- Processing. Every piece of content ingested by a connector goes through a pipeline for text and metadata extraction. Onna supports most common workplace content types, including Office/Google files, PDFs, emails, text files, images, web pages, and container files like Zip, PST, and Mbox. The content undergoes a series of steps such as optical character recognition (OCR), language detection, entity extraction, summarization, and versioning. Each step adds further value and associates it with the original content. This process is applied to each parent file, recursively extracting all embedded children and subjecting them to the same treatment.

- Indexing. Once the processing is complete, each piece of content is indexed to facilitate search and other downstream use cases. The content is indexed in multiple variations to support different types of searches and targeted advanced searches. A robust permissioning system ensures that users can only access their own data or data shared with them.

- Actions. Once content is indexed, users can perform various actions, including searching, tagging, sharing, monitoring content, and exporting entire sources or specific search results.

With these capabilities, we initially entered the market with a focus on the eDiscovery use case, empowering legal teams to find the proverbial needles in the haystack. However, we didn't limit ourselves to developing just an eDiscovery tool; instead, we created a powerful data management platform that seamlessly transfers information from point A to B, extracts valuable insights, and enables actionable outcomes.

Early use of AI at Onna

As mentioned earlier, we have designed our processing engine to be extensible, with each step in the pipeline functioning as a distinct microservice that performs a specific task and appends its output to each piece of content. To accomplish this, we have developed microservices for the following functions:

- Entity extraction: This microservice identifies various types of personally identifiable information (PII) such as individuals, companies, locations, emails, birthdays, addresses, and social security numbers.

- Object detection: This microservice detects ID cards and passports within images.

- Summarization: This microservice generates a concise paragraph to display beneath each result on the search results page, providing a summary of the content.

- Classification: We created a locally built machine-learning model to classify documents as contracts or non-contracts.

- Language detection: This microservice determines the language used in each document.

As you can see, some of these applications serve a broad purpose, while others address very specific problems.

The GPT era

When Generative AI and Large Language Models (LLMs) started making global strides in late 2022, we at Onna quickly recognized the immense potential these technologies held. Through conversations with our customers, we found that they too were interested in leveraging these technologies to elevate the information they could extract from their data.

As chatbots became increasingly prevalent, people started engaging with them and acquiring valuable public knowledge. However, these chatbots were unable to respond to queries based on closed domain-specific enterprise data.

This sparked an idea: What if Onna could empower its customers to develop domain-specific LLMs using their own data?

Fortunately, we had a significant advantage with the content we had already ingested from various data sources on behalf of our customers. We extracted all the text and indexed it for search. This content is continuously refreshed as it updates at the source, and we’ve implemented a robust security system to ensure that interactions are limited to the data each user has access to.

Experimentation

Every year, Onna hosts a Hackathon where all employees are invited to work together and quickly prototype innovative ideas. The participants then present their concepts to the entire company for evaluation. One of the most popular ideas this year, and the eventual winner of the Hackathon, was a domain-specific chatbot trained to answer queries specific to Onna’s platform.

After the Hackathon, we formed a volunteer squad comprising engineers and product managers to carry the project forward. During the planning phase, we identified two primary use cases that we wanted to focus on:

- Answering questions and instructions through both a Chat UI and a Slack bot

- Developing a workflow to utilize Onna data for training ML models

While we’ve discussed additional use cases that may be explored in the future, our team has prioritized these specific areas to maintain a balance with our existing product strategy and fulfill our commitments to our customers.

The Onna Chatbot

We designed the chatbot as a document retrieval system using a RAG (Retrieval-Augmented Generation) workflow that capitalizes on Onna's existing internal text data in OpenSearch, as well as new-to-us tools like the Langchain framework and the Chroma embeddings database. The workflow can be summarized as follows:

- The user enters a query, which can be a question or an instruction.

- Semantically meaningful features are extracted from the query, translating it into a more structured format.

- The translated query is sent to OpenSearch to identify the most relevant documents.

- Using the Langchain framework, each document is divided into smaller chunks. Embeddings are calculated for each chunk and stored in the Chroma database.

- The embeddings for the modified query are also computed and compared with the existing embeddings to identify the most relevant text segments.

- The identified chunks are combined with the user’s query to create a comprehensive prompt.

- The prompt is submitted to a Large Language Model, which generates a response to the query.

- The response, along with references to the underlying documents, is provided to the user.

- At this stage, the user has the option to perform various actions such as tagging, sharing, or exporting the resulting documents.

It’s important to address the elephant in the room — the contribution of the LLM submission component. In our experimentation, we utilized data from Onna’s publicly available Helpdesk articles and the well-known Enron dataset. Recognizing the critical privacy concerns for a production deployment, we prioritize the usage of any model strictly on an opt-in basis. Additionally, we ensure either complete air-gapping of the model or shield it through legal contracts with a trusted partner to protect data privacy.

We evaluated various models, both open-source and closed-source, to assess their responsiveness to our instructions. At the time of writing, these models included Google's Flan-T5, Vicuna, Google's PaLM2, and OpenAI's ChatGPT.

Each model exhibited its own strengths and weaknesses, and we couldn't crown a definitive winner. As is widely known, OpenAI’s models generally provide the most accurate responses and superior performance, but they score low on the privacy scale. We lack assurances about data handling once it is submitted to their APIs.

We’ve also seen impressive results from the Google PaLM2 API through the Vertex AI program. This model is shaping up to be a strong contender, as Google’s terms and conditions provide more favorable stipulations concerning data privacy and usage for further training.

Open-source models also show significant potential. In several instances, our results have rivaled those of commercial counterparts, while in others, they’ve left us scratching our heads. Outputs that are hallucinations or outright gibberish are common, so we're hesitant to put them into production just yet. Nonetheless, the progress observed here is highly encouraging, especially considering the relatively short time elapsed since the release of the first LLaMA-based model.

Using Onna for domain-specific LLM training

There is no denying that preparing data for machine learning training can be time-consuming and costly for both engineering teams building data pipelines and data scientists shaping the training format.

We've already covered the data pipeline aspect, so let's look at how we quickly developed a method to export data from Onna into a format compatible with Vertex AI.

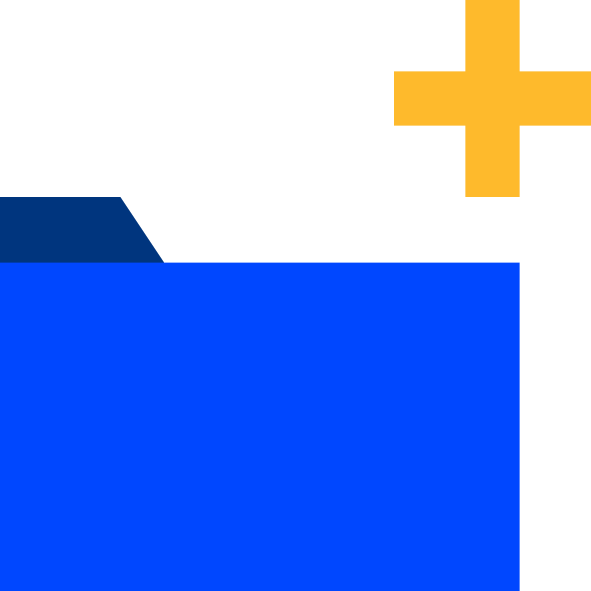

Suppose we're handling a classification task where a customer needs to build a training set consisting of numerous label:text pairs. This dataset will be used to train a model that can classify unseen documents.

Using Onna, the entire workflow can be accomplished swiftly as follows:

- For each desired label, the user runs searches using a combination of search terms to narrow down relevant documents. They have the option to either apply mass tagging to all search results or individually review and tag each document with the appropriate label.

- Execute a search using the above tags as filters to retrieve only the tagged documents.

- Export the search results using the jsonl export feature.

In this example, Vertex AI expects the dataset format to look like this:

In Onna, the user creates an export with an export_schema setting to map the tag and extracted_text fields from Onna to Vertex AI.

In this simplified example, we create an export, resulting in a JSONL file that is fully compliant for training a Vertex AI model. This file is then ready to be used for any business purpose required by the client.

Our path forward

What does all of this mean for Onna’s future? We’ve learned a lot from our experimentation, experiencing definite successes as well as identifying opportunities for improvement. Along the way, we’ve discovered various use cases that will enhance the experience within the Onna platform. These include semantic search, natural language query processing, smart actions, cross-data source summarization, among others. We will keep our blog updated with our progress as we continue.

We also realized that we can offer these same advantages to our customers as they develop their own AI applications. Our goal is to build tooling that allows our customers to leverage the enterprise data they have in Onna to power their use cases, requiring minimal investment on the data side.

And while the technology in areas such as language models, search, and natural language processing is not entirely new, innovation and advancements are currently happening at a rapid pace. The Onna R&D team will continue to invest in this field, both from a learning and delivery standpoint. We will also partner with customers and vendors who have expressed interest in this field and the capabilities we have already demonstrated.

There’s still quite a journey ahead of us, but personally, I’m excited to explore where it takes us and the new possibilities we unlock.

If your company is interested in building domain-specific LLMs and is considering how to tackle data preparation, please get in touch.

eDiscovery

eDiscovery Collections

Collections Processing

Processing Early Case Assessment

Early Case Assessment Information Governance

Information Governance Data Migration

Data Migration Data Archiving

Data Archiving Platform Services

Platform Services Connectors

Connectors Platform API

Platform API Pricing Plans

Pricing Plans Professional Services

Professional Services Technical Support

Technical Support Partnerships

Partnerships About us

About us Careers

Careers Newsroom

Newsroom Reveal

Reveal Logikcull by Reveal

Logikcull by Reveal Events

Events Webinars

Webinars OnnAcademy

OnnAcademy Blog

Blog Content Library

Content Library Trust Center

Trust Center Developer Hub

Developer Hub